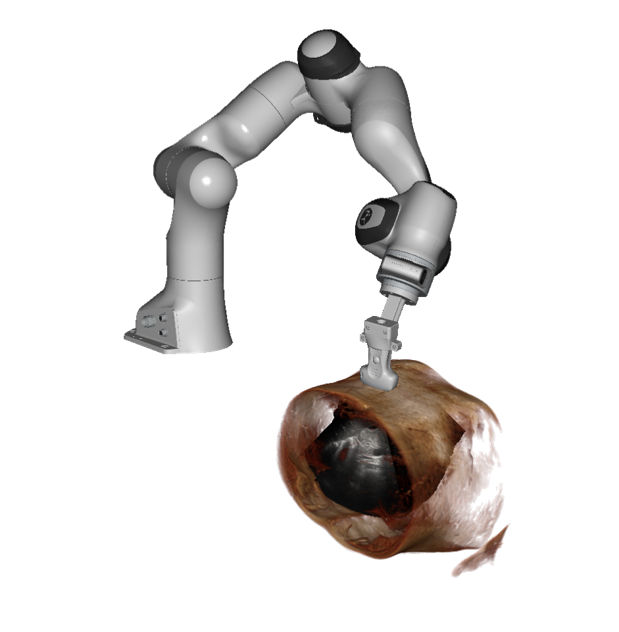

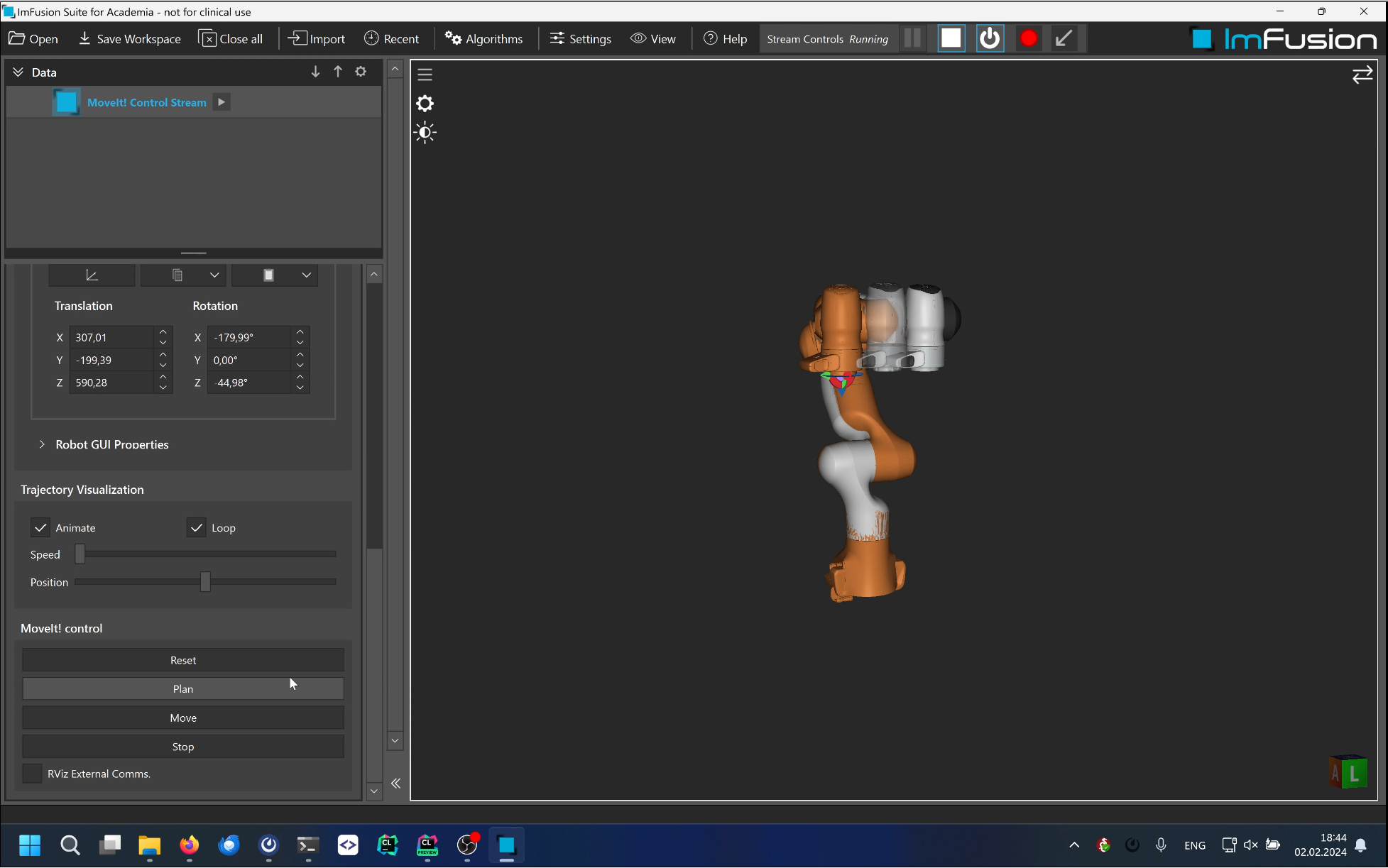

The ImFusion Suite is well equipped to support the full workflow of common interventional scenarios. For guiding tools such as needles, the available tracking-system interfaces integrate with other software modules. This allows fast registration to pre-interventional imaging data and to live freehand ultrasound, which together provide comprehensive, interactive real-time visualization.

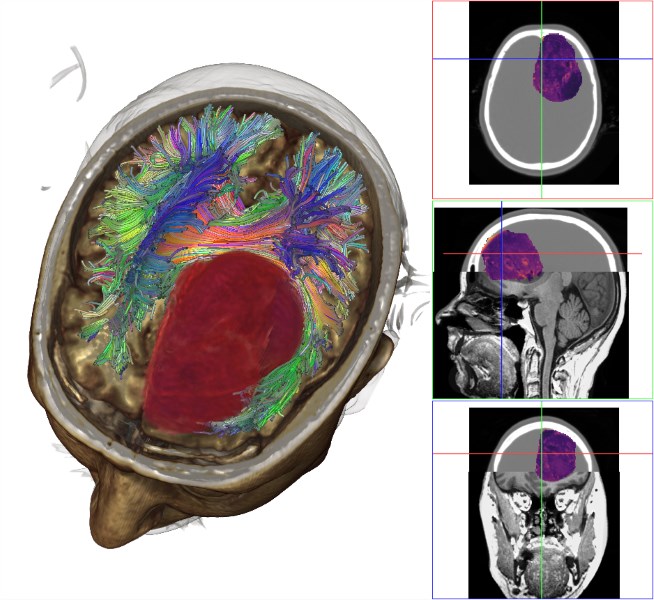

Typically, the first step of a clinical intervention is an extensive image analysis and planning phase. This often involves segmenting important structures and annotating the target point and insertion path. In the interventional theater, a tracking system then comes into play. The ImFusion Suite currently supports many common proprietary optical and electromagnetic systems, including NDI Polaris(TM), NDI Aurora(TM), Ascension driveBAY/trakSTAR(TM), Atracsys FusionTrack and SpryTrack, and OptiTrack systems such as the V120. It also provides a bi-directional OpenIGTLink communication interface for full flexibility.
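To illustrate what travels over an OpenIGTLink connection, the sketch below packs a TRANSFORM message, such as a tracked needle pose, behind the 58-byte header defined by the OpenIGTLink protocol. The sketch is independent of the ImFusion Suite, and the function names are hypothetical. The CRC-64 field is left to the caller and defaults to 0. A receiver that validates checksums would therefore reject these messages as written.

```python
import struct

# OpenIGTLink header: 58 bytes in network (big-endian) byte order.
# version (uint16), message type (char[12]), device name (char[20]),
# timestamp (uint64), body size (uint64), CRC-64 of the body (uint64).
HEADER_FORMAT = ">H12s20sQQQ"
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)  # 58


def pack_transform_message(device_name, matrix, timestamp=0, crc=0):
    """Build a TRANSFORM message from a 3x4 [R | t] matrix given as a list of rows.

    Hypothetical helper: the CRC is supplied by the caller, not computed here.
    """
    # TRANSFORM body: 12 float32 values, the rotation column by column
    # (R11, R21, R31, R12, ...), followed by the translation (TX, TY, TZ).
    values = [matrix[r][c] for c in range(3) for r in range(3)]
    values += [matrix[r][3] for r in range(3)]
    body = struct.pack(">12f", *values)
    header = struct.pack(
        HEADER_FORMAT,
        1,                             # protocol version
        b"TRANSFORM",                  # struct pads to 12 bytes with NUL
        device_name.encode("ascii"),   # padded (or truncated) to 20 bytes
        timestamp,
        len(body),
        crc,
    )
    return header + body


def unpack_header(data):
    """Decode the fixed-size header at the start of a received message."""
    version, msg_type, device, timestamp, body_size, crc = struct.unpack(
        HEADER_FORMAT, data[:HEADER_SIZE]
    )
    return {
        "version": version,
        "type": msg_type.rstrip(b"\0").decode("ascii"),
        "device": device.rstrip(b"\0").decode("ascii"),
        "timestamp": timestamp,
        "body_size": body_size,
        "crc": crc,
    }
```

For example, `pack_transform_message("Needle", [[1, 0, 0, 10], [0, 1, 0, 20], [0, 0, 1, 30]])` yields a 106-byte message: the 58-byte header followed by a 48-byte body.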

To register the pre-interventional images to the patient in the interventional theater, the software's registration module supports two approaches: intensity-based registration using the imaging available on site, and feature-based registration using fiducials and a pointer device.
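Feature-based registration with fiducials is commonly solved as a paired-point rigid registration. The sketch below uses the generic SVD-based least-squares (Kabsch) method with NumPy. It estimates the rotation and translation that map fiducial positions annotated in the pre-interventional image onto the same fiducials touched with the pointer in tracker coordinates. It then reports the fiducial registration error (FRE). This is a textbook method and not necessarily the algorithm the ImFusion Suite uses. The function names are hypothetical.

```python
import numpy as np


def register_fiducials(image_points, patient_points):
    """Least-squares rigid transform (R, t) such that patient ≈ R @ image + t.

    Both inputs are (N, 3) arrays of corresponding fiducial positions,
    with N >= 3 points that are not all collinear.
    """
    P = np.asarray(image_points, dtype=float)
    Q = np.asarray(patient_points, dtype=float)
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    # Cross-covariance matrix of the centred point sets.
    H = (P - p_mean).T @ (Q - q_mean)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    D = np.diag([1.0, 1.0, d])
    R = Vt.T @ D @ U.T
    t = q_mean - R @ p_mean
    return R, t


def fiducial_registration_error(image_points, patient_points, R, t):
    """Root-mean-square distance between mapped image fiducials and patient fiducials."""
    P = np.asarray(image_points, dtype=float)
    Q = np.asarray(patient_points, dtype=float)
    residuals = (P @ R.T + t) - Q
    return float(np.sqrt((residuals ** 2).sum(axis=1).mean()))
```

With noise-free fiducials the FRE is essentially zero. With real pointer measurements it gives a first, though incomplete, indication of registration quality.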

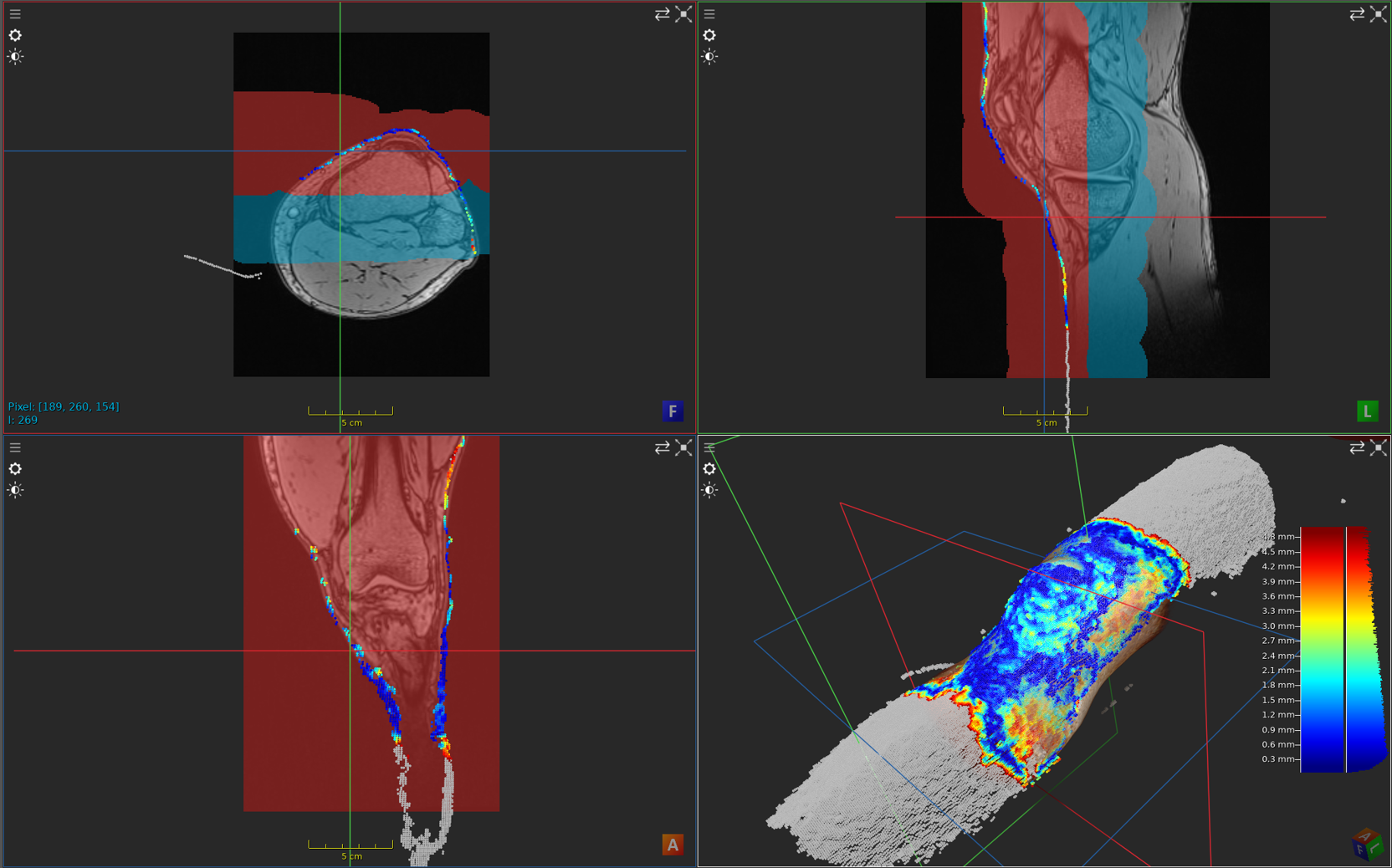

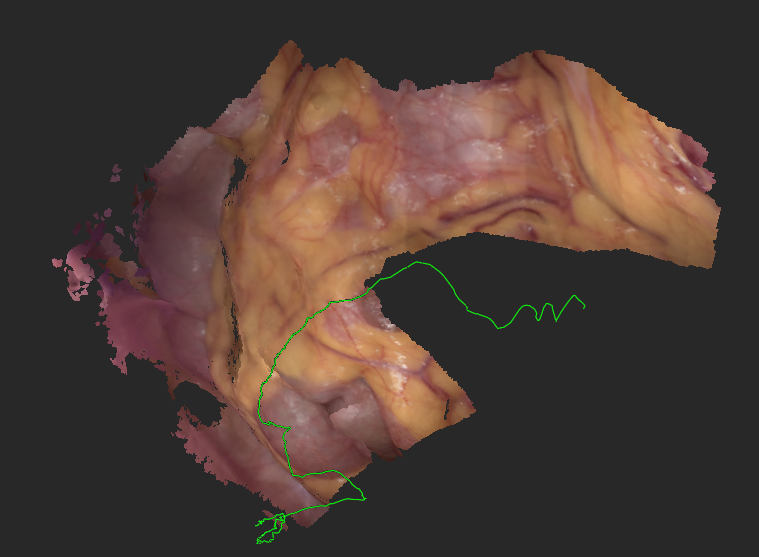

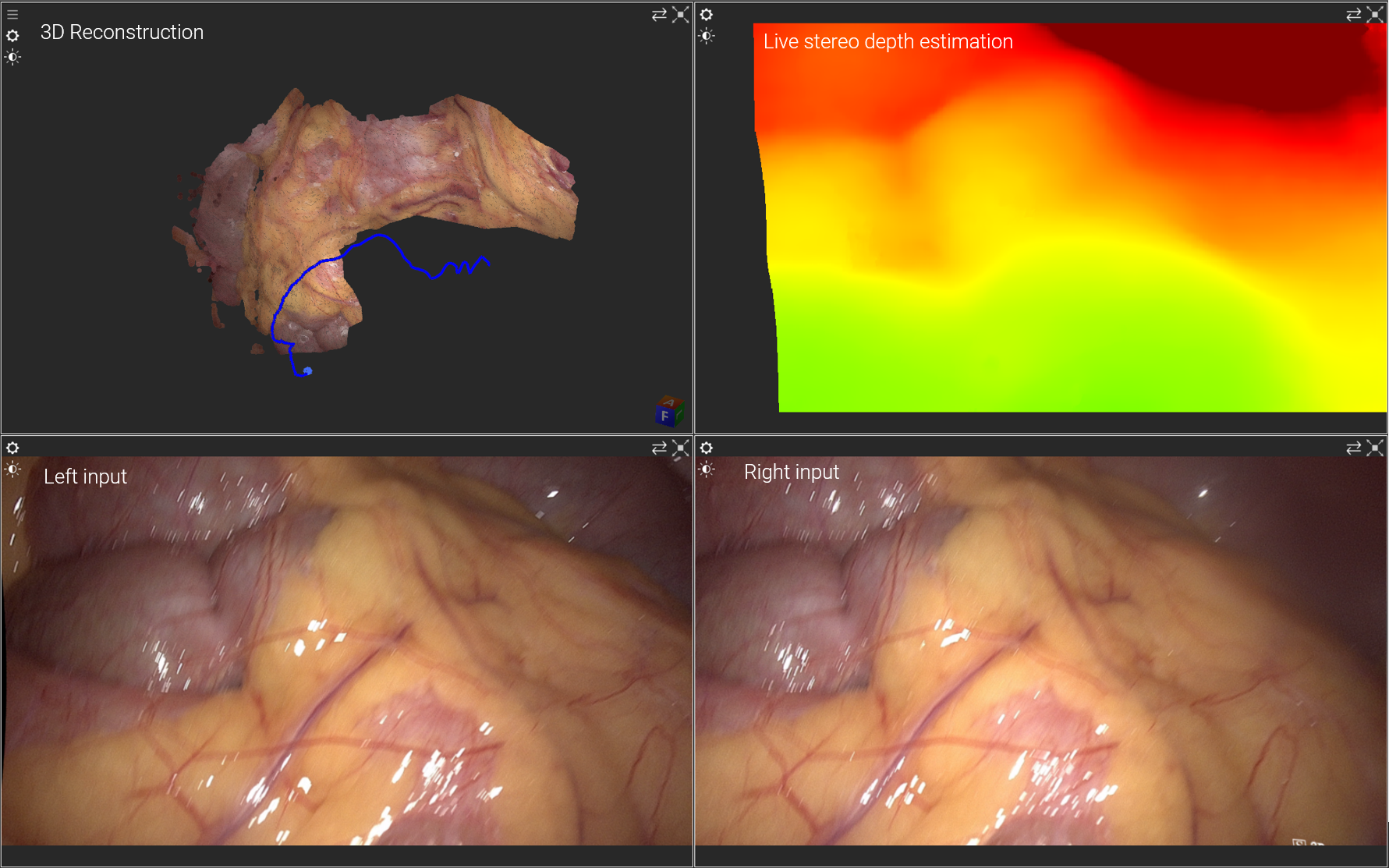

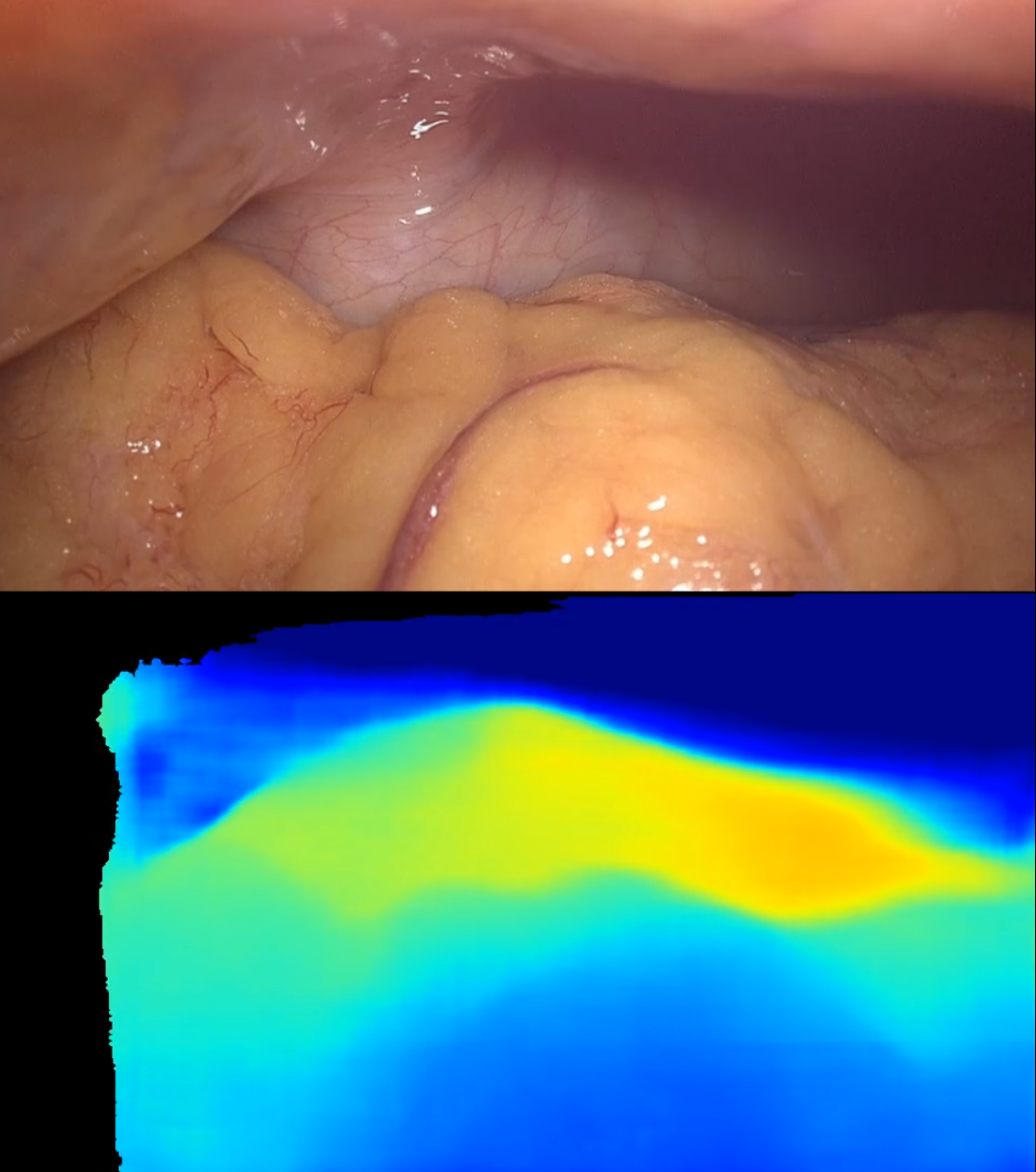

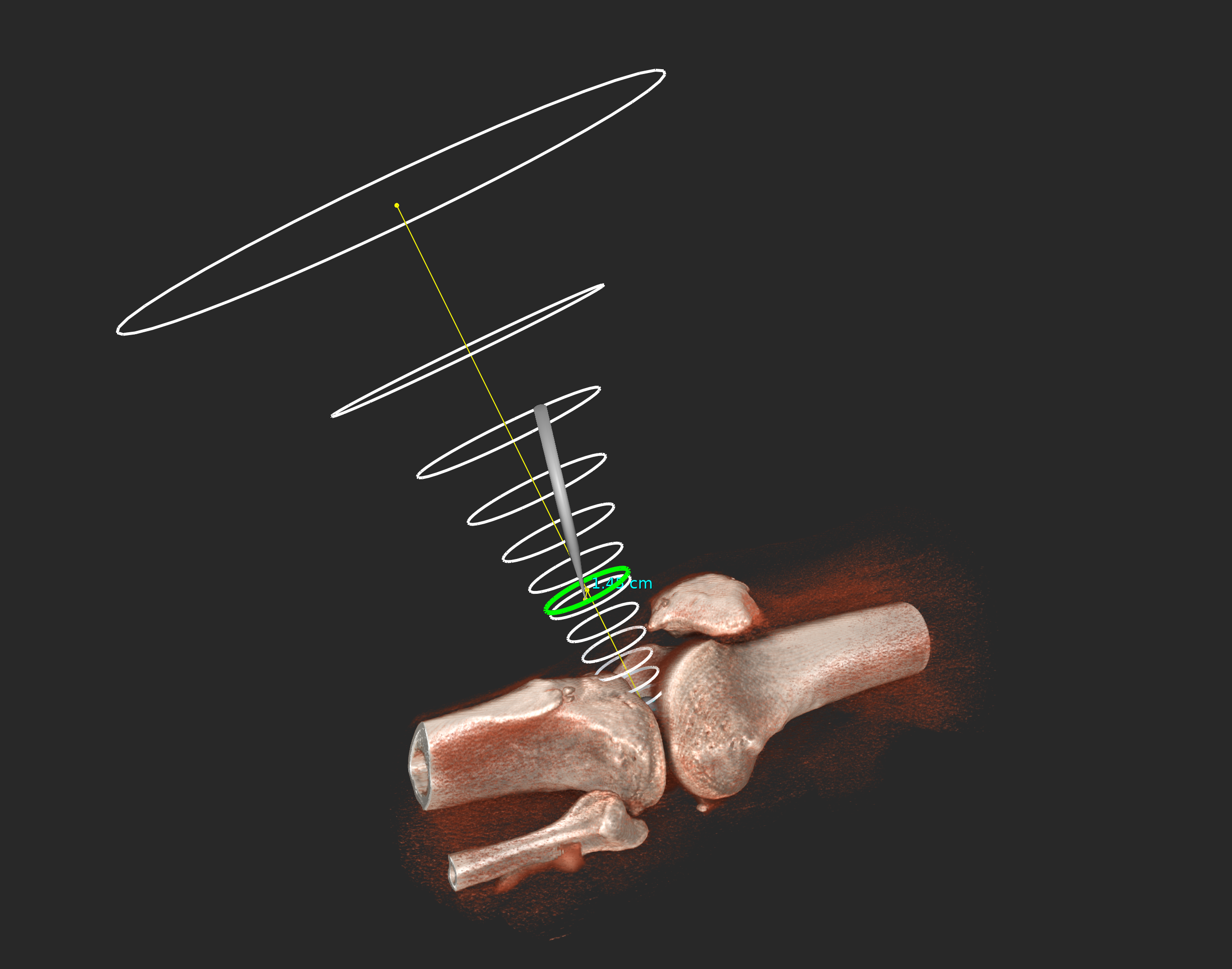

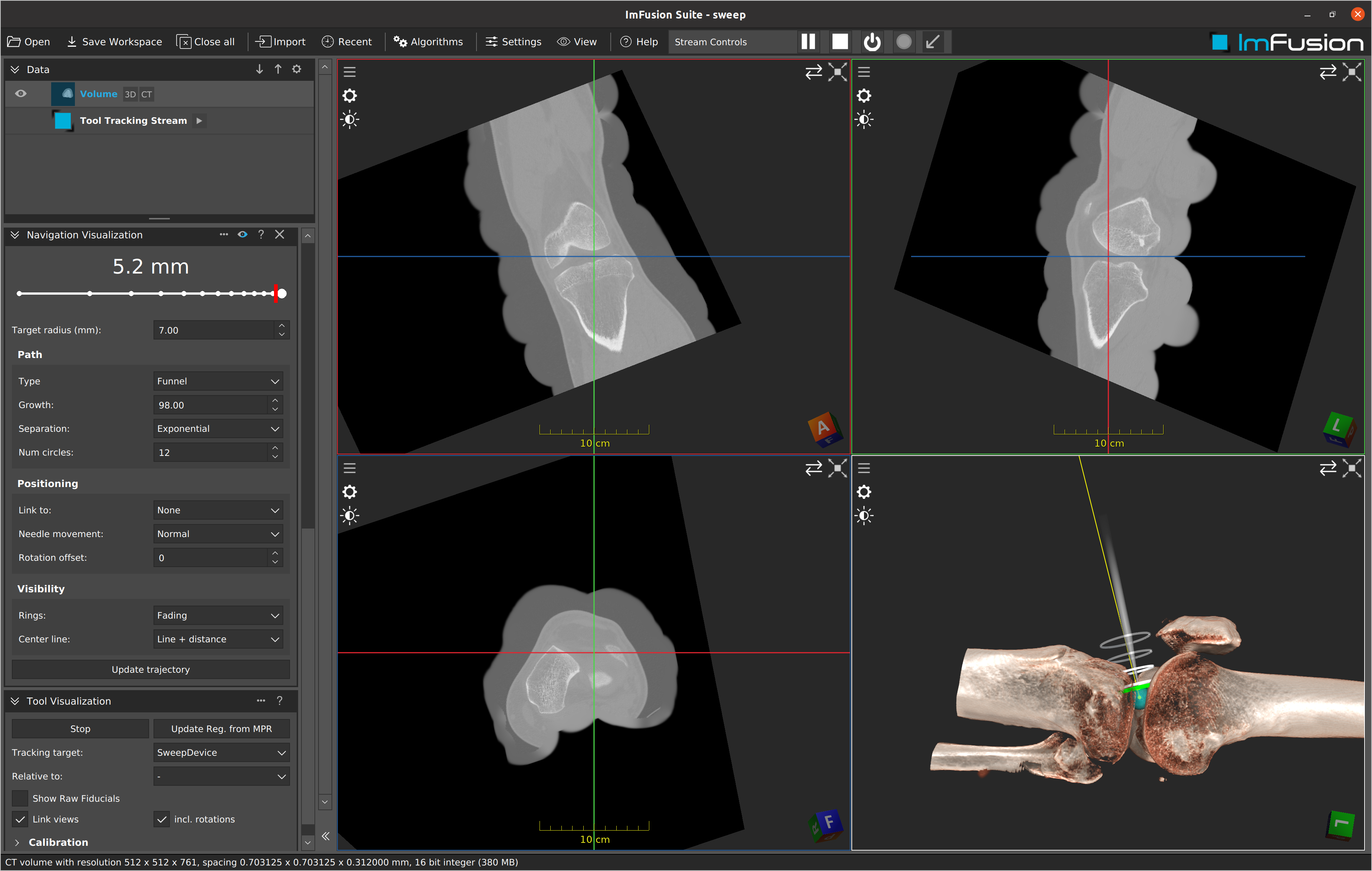

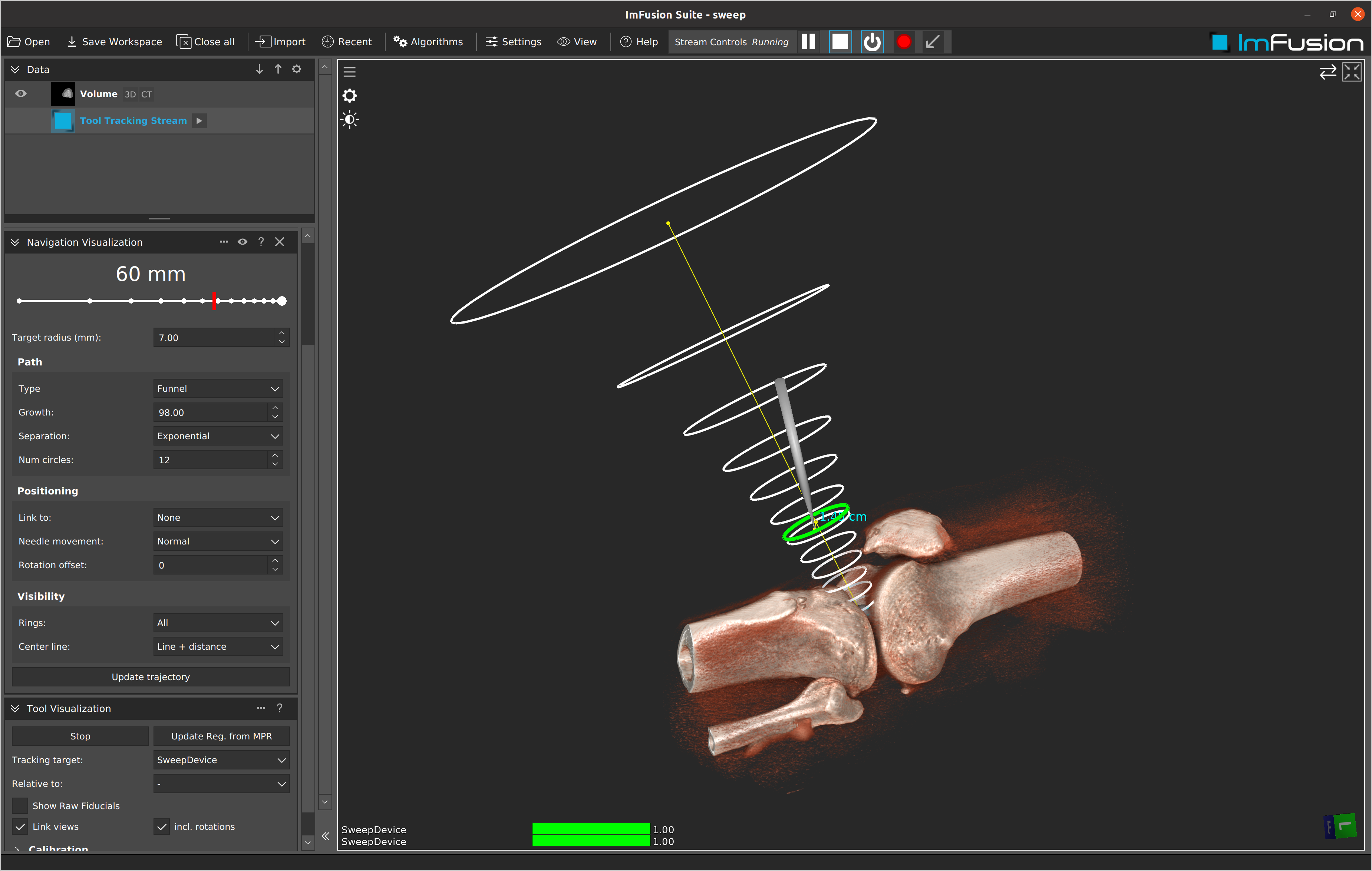

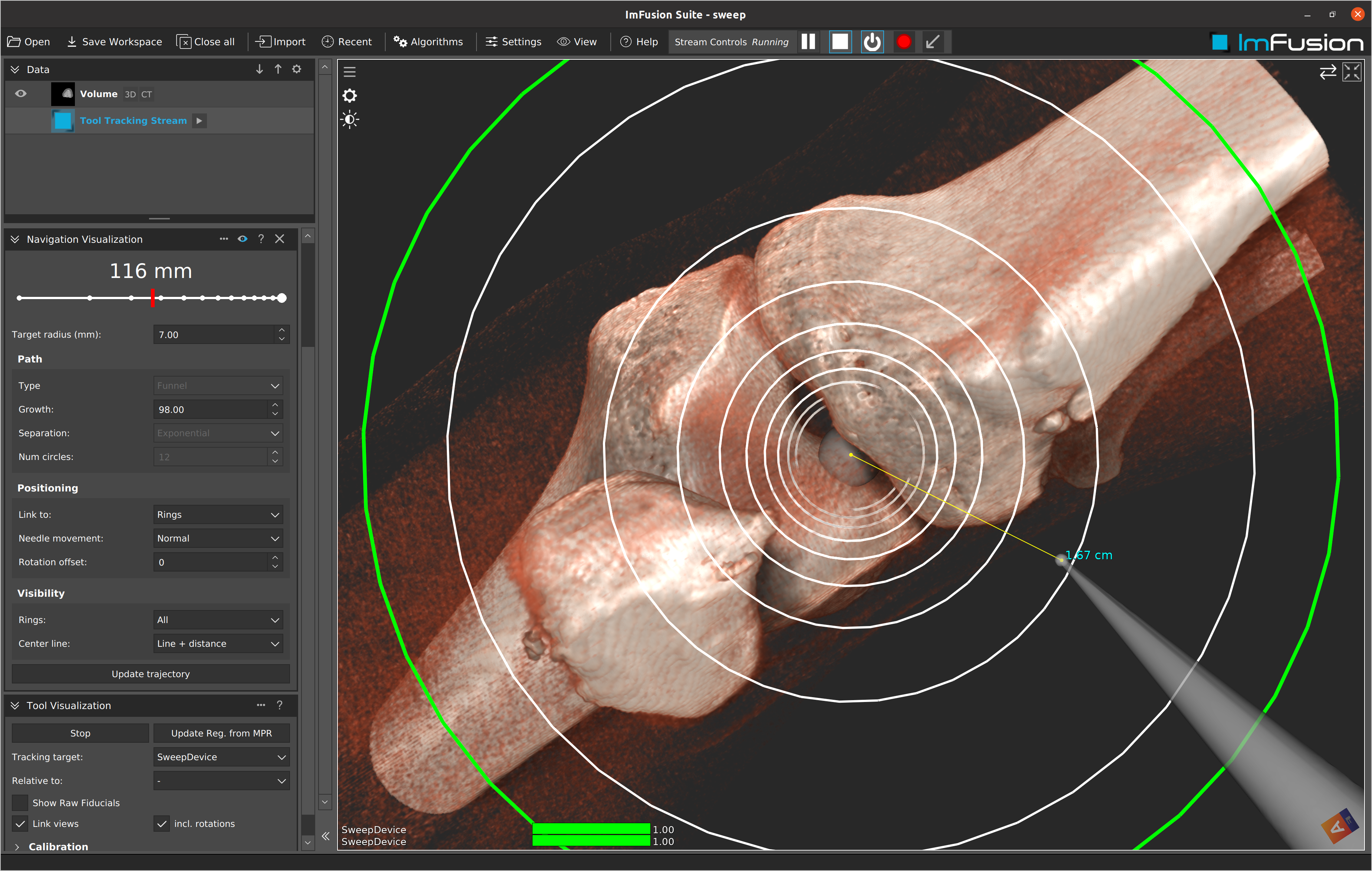

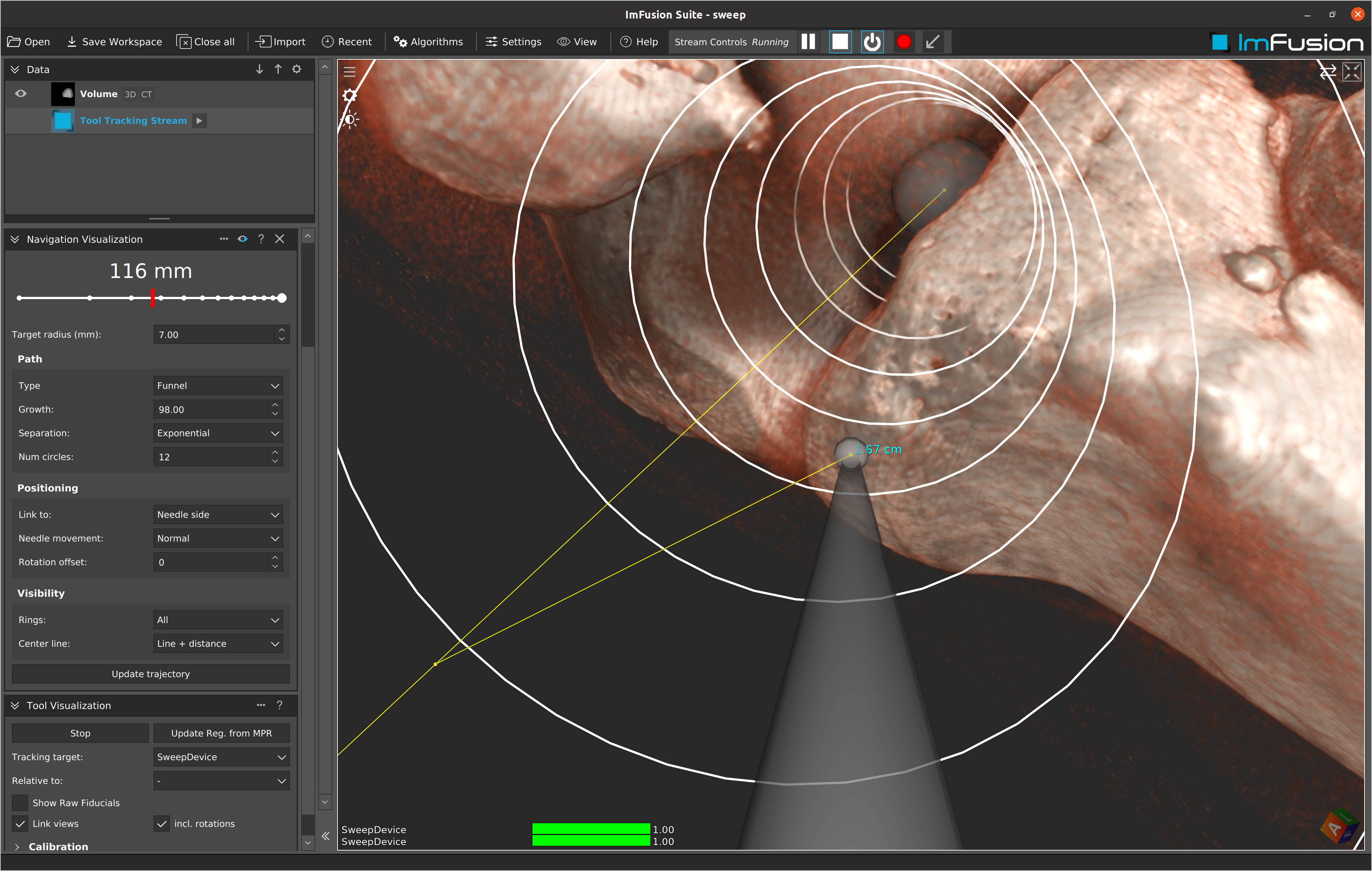

Various visualization techniques can then be used for 3D guidance during the intervention. Tool-in-hand scenarios, such as manipulating a tracked tool, require a fixed scene. Eye-in-hand scenarios, such as tracked ultrasound probes, instead require geometrically correct blending of pre-interventional imaging data into a moving scene. In either case, the ImFusion Suite can provide 3D views as well as multi-modal, multi-planar reconstructions.
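In the eye-in-hand case, geometrically correct blending amounts to chaining rigid transforms. Each ultrasound pixel is scaled to millimetres and mapped through the probe calibration and the live tracking pose. It is then mapped through the patient registration into the pre-interventional image. The NumPy sketch below shows that chain under stated assumptions: the function names and matrix conventions are illustrative, the calibration and registration are assumed fixed, and none of it is the ImFusion Suite's API.

```python
import numpy as np


def make_transform(R, t):
    """4x4 homogeneous matrix from a 3x3 rotation and a translation vector."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def ultrasound_pixel_to_preop(u, v, spacing, T_calib, T_tracking, T_registration):
    """Map pixel (u, v) of a tracked 2D ultrasound frame into pre-interventional image coordinates.

    spacing:        (sx, sy) pixel size in mm
    T_calib:        ultrasound frame (mm) -> probe marker, from probe calibration (assumed fixed)
    T_tracking:     probe marker -> tracker, the live pose reported by the tracking system
    T_registration: tracker -> pre-interventional image, from patient registration (assumed fixed)
    """
    # The pixel lies in the image plane (z = 0) of the ultrasound frame.
    p_frame = np.array([u * spacing[0], v * spacing[1], 0.0, 1.0])
    T_total = T_registration @ T_tracking @ T_calib
    return (T_total @ p_frame)[:3]
```

Only `T_tracking` changes from frame to frame. Updating it moves the ultrasound plane, and with it the view, through the pre-interventional data. In the tool-in-hand case, the same kind of chain places the tracked tool inside a scene that itself stays fixed.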